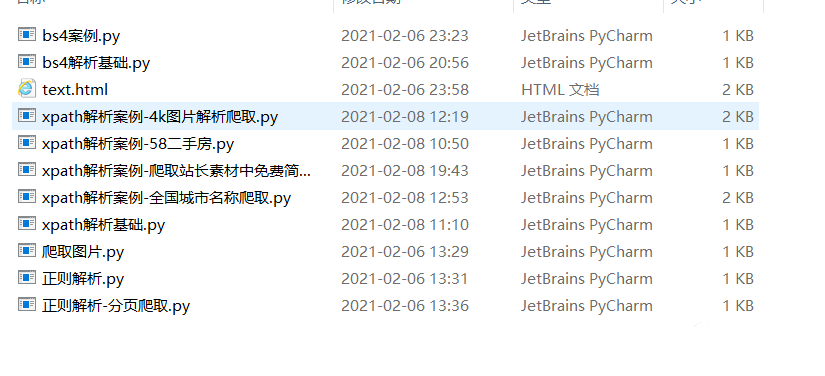

全网最全系统学习爬虫教程,用爬虫进行数据分析(bs4,xpath,正则表达式)

1.bs4解析基础

2.bs4案例

3.xpath解析基础

4.xpath解析案例-4k图片解析爬取

5.xpath解析案例-58二手房

6.xpath解析案例-爬取站长素材中免费简历模板

7.xpath解析案例-全国城市名称爬取

8.正则解析

9.正则解析-分页爬取

10.爬取图片

1.bs4解析基础

from bs4 import BeautifulSoup

fp = open('第三章 数据分析/text.html','r',encoding='utf-8')

soup = BeautifulSoup(fp,'lxml')

#print(soup)

#print(soup.a)

#print(soup.div)

#print(soup.find('div'))

#print(soup.find('div',class_="song"))

#print(soup.find_all('a'))

#print(soup.select('.tang'))

#print(soup.select('.tang > ul > li >a')[0].text)

#print(soup.find('div',class_="song").text)

#print(soup.find('div',class_="song").string)

print(soup.select('.tang > ul > li >a')[0]['href'])

2.bs4案例

from bs4 import BeautifulSoup

import requests

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = "http://sanguo.5000yan.com/"

page_text = requests.get(url ,headers = headers).content

#print(page_text)

soup = BeautifulSoup(page_text,'lxml')

li_list = soup.select('.list > ul > li')

fp = open('./sanguo.txt','w',encoding='utf-8')

for li in li_list:

title = li.a.string

#print(title)

detail_url = 'http://sanguo.5000yan.com/'+li.a['href']

print(detail_url)

detail_page_text = requests.get(detail_url,headers = headers).content

detail_soup = BeautifulSoup(detail_page_text,'lxml')

div_tag = detail_soup.find('div',class_="grap")

content = div_tag.text

fp.write(title+":"+content+'\n')

print(title,'爬取成功!!!')

3.xpath解析基础

from lxml import etree

tree = etree.parse('第三章 数据分析/text.html')

# r = tree.xpath('/html/head/title')

# print(r)

# r = tree.xpath('/html/body/div')

# print(r)

# r = tree.xpath('/html//div')

# print(r)

# r = tree.xpath('//div')

# print(r)

# r = tree.xpath('//div[@class="song"]')

# print(r)

# r = tree.xpath('//div[@class="song"]/P[3]')

# print(r)

# r = tree.xpath('//div[@class="tang"]//li[5]/a/text()')

# print(r)

# r = tree.xpath('//li[7]/i/text()')

# print(r)

# r = tree.xpath('//li[7]//text()')

# print(r)

# r = tree.xpath('//div[@class="tang"]//text()')

# print(r)

# r = tree.xpath('//div[@class="song"]/img/@src')

# print(r)

4.xpath解析案例-4k图片解析爬取

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'http://pic.netbian.com/4kmeinv/'

response = requests.get(url,headers = headers)

#response.encoding=response.apparent_encoding

#response.encoding = 'utf-8'

page_text = response.text

tree = etree.HTML(page_text)

li_list = tree.xpath('//div[@class="slist"]/ul/li')

# if not os.path.exists('./picLibs'):

# os.mkdir('./picLibs')

for li in li_list:

img_src = 'http://pic.netbian.com/'+li.xpath('./a/img/@src')[0]

img_name = li.xpath('./a/img/@alt')[0]+'.jpg'

img_name = img_name.encode('iso-8859-1').decode('gbk')

# print(img_name,img_src)

# print(type(img_name))

img_data = requests.get(url = img_src,headers = headers).content

img_path ='picLibs/'+img_name

#print(img_path)

with open(img_path,'wb') as fp:

fp.write(img_data)

print(img_name,"下载成功")

5.xpath解析案例-58二手房

import requests

from lxml import etree

url = 'https://bj.58.com/ershoufang/p2/'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

page_text = requests.get(url=url,headers = headers).text

tree = etree.HTML(page_text)

li_list = tree.xpath('//section[@class="list-left"]/section[2]/div')

fp = open('58.txt','w',encoding='utf-8')

for li in li_list:

title = li.xpath('./a/div[2]/div/div/h3/text()')[0]

print(title)

fp.write(title+'\n')

6.xpath解析案例-爬取站长素材中免费简历模板

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.aqistudy.cn/historydata/'

page_text = requests.get(url,headers = headers).text

7.xpath解析案例-全国城市名称爬取

import requests

from lxml import etree

import os

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36'

}

url = 'https://www.aqistudy.cn/historydata/'

page_text = requests.get(url,headers = headers).text

tree = etree.HTML(page_text)

# holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li')

# all_city_name = []

# for li in holt_li_list:

# host_city_name = li.xpath('./a/text()')[0]

# all_city_name.append(host_city_name)

# city_name_list = tree.xpath('//div[@class="bottom"]/ul/div[2]/li')

# for li in city_name_list:

# city_name = li.xpath('./a/text()')[0]

# all_city_name.append(city_name)

# print(all_city_name,len(all_city_name))

#holt_li_list = tree.xpath('//div[@class="bottom"]/ul//li')

holt_li_list = tree.xpath('//div[@class="bottom"]/ul/li | //div[@class="bottom"]/ul/div[2]/li')

all_city_name = []

for li in holt_li_list:

host_city_name = li.xpath('./a/text()')[0]

all_city_name.append(host_city_name)

print(all_city_name,len(all_city_name))

8.正则解析

import requests

import re

import os

if not os.path.exists('./qiutuLibs'):

os.mkdir('./qiutuLibs')

url = 'https://www.qiushibaike.com/imgrank/'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'

}

page_text = requests.get(url,headers = headers).text

ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

img_src_list = re.findall(ex,page_text,re.S)

print(img_src_list)

for src in img_src_list:

src = 'https:' + src

img_data = requests.get(url = src,headers = headers).content

img_name = src.split('/')[-1]

imgPath = './qiutuLibs/'+img_name

with open(imgPath,'wb') as fp:

fp.write(img_data)

print(img_name,"下载完成!!!!!")

9.正则解析-分页爬取

import requests

import re

import os

if not os.path.exists('./qiutuLibs'):

os.mkdir('./qiutuLibs')

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4385.0 Safari/537.36'

}

url = 'https://www.qiushibaike.com/imgrank/page/%d/'

for pageNum in range(1,3):

new_url = format(url%pageNum)

page_text = requests.get(new_url,headers = headers).text

ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

img_src_list = re.findall(ex,page_text,re.S)

print(img_src_list)

for src in img_src_list:

src = 'https:' + src

img_data = requests.get(url = src,headers = headers).content

img_name = src.split('/')[-1]

imgPath = './qiutuLibs/'+img_name

with open(imgPath,'wb') as fp:

fp.write(img_data)

print(img_name,"下载完成!!!!!")

10.爬取图片

import requests

url = 'https://pic.qiushibaike.com/system/pictures/12404/124047919/medium/R7Y2UOCDRBXF2MIQ.jpg'

img_data = requests.get(url).content

with open('qiutu.jpg','wb') as fp:

fp.write(img_data)

关于Python技术储备

学好 Python 不论是就业还是做副业赚钱都不错,但要学会 Python 还是要有一个学习规划。最后大家分享一份全套的 Python 学习资料,给那些想学习 Python 的小伙伴们一点帮助!

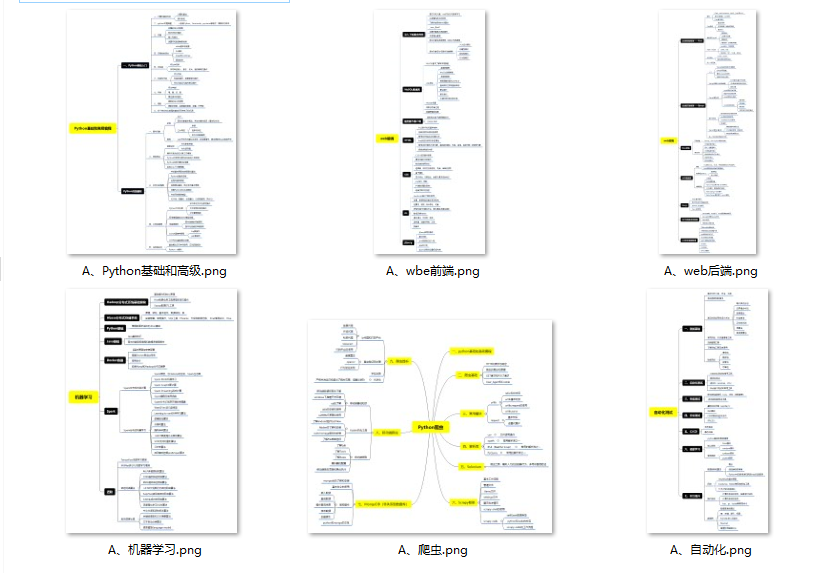

一、Python所有方向的学习路线

Python所有方向的技术点做的整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。(文末获取!)

温馨提示:篇幅有限,已打包文件夹,获取方式在“文末”!!!

二、Python必备开发工具

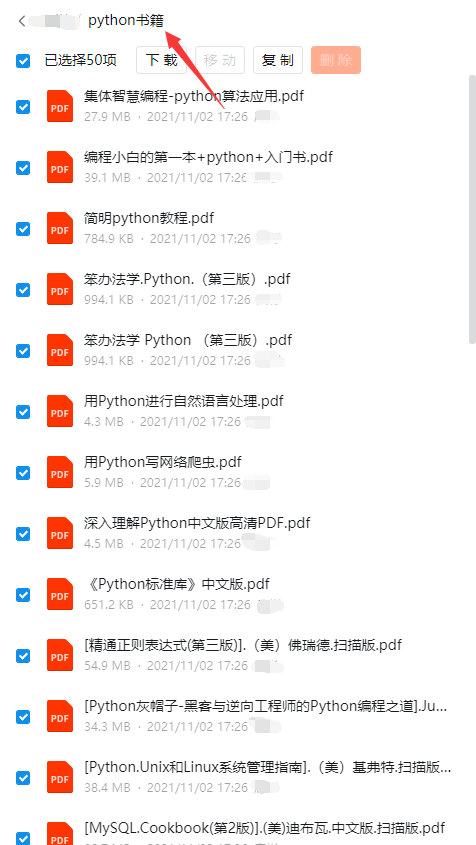

三、精品Python学习书籍

当我学到一定基础,有自己的理解能力的时候,会去阅读一些前辈整理的书籍或者手写的笔记资料,这些笔记详细记载了他们对一些技术点的理解,这些理解是比较独到,可以学到不一样的思路。

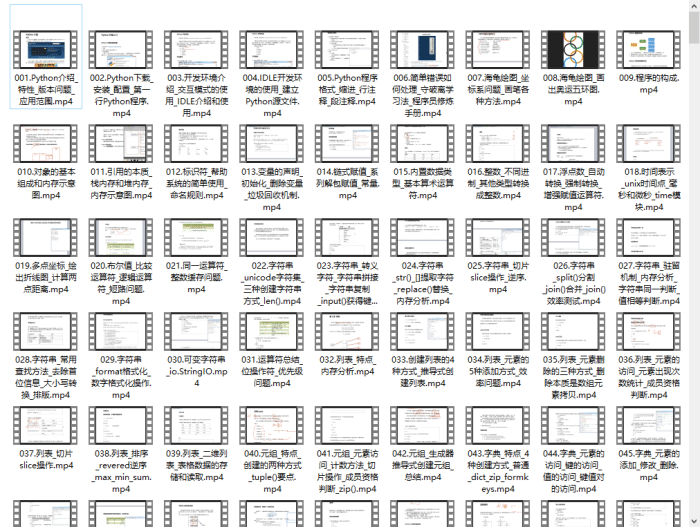

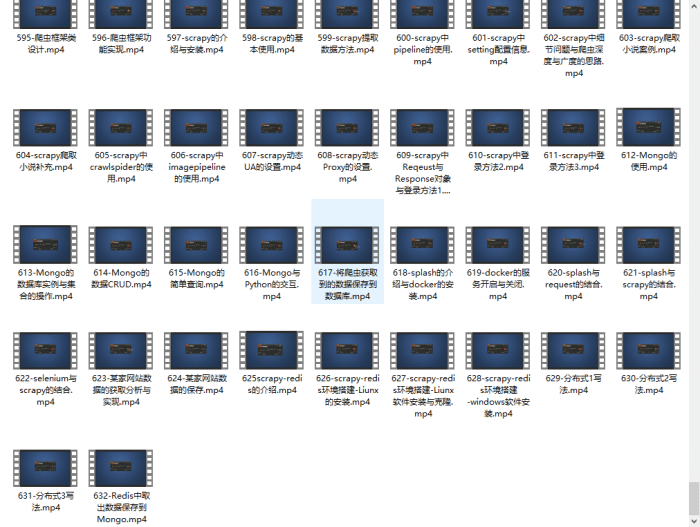

四、Python视频合集

观看零基础学习视频,看视频学习是最快捷也是最有效果的方式,跟着视频中老师的思路,从基础到深入,还是很容易入门的。

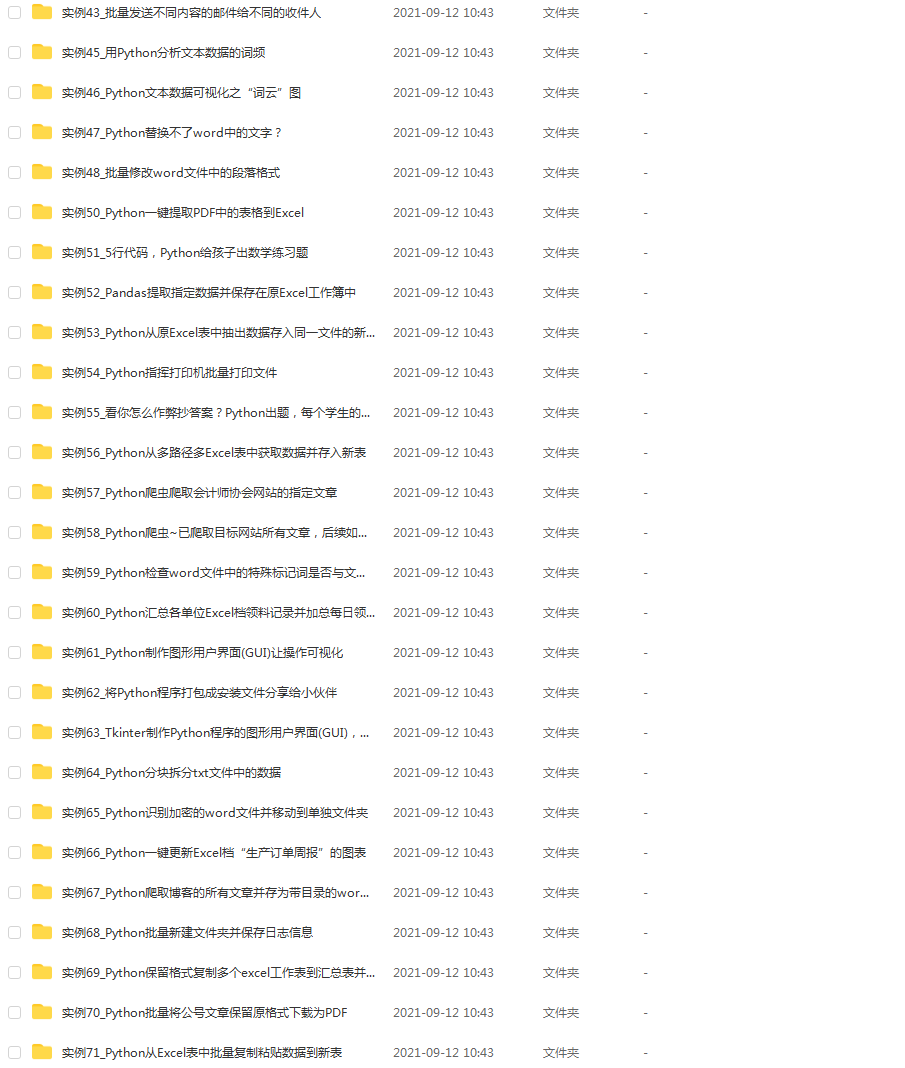

五、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。

六、Python练习题

检查学习结果。

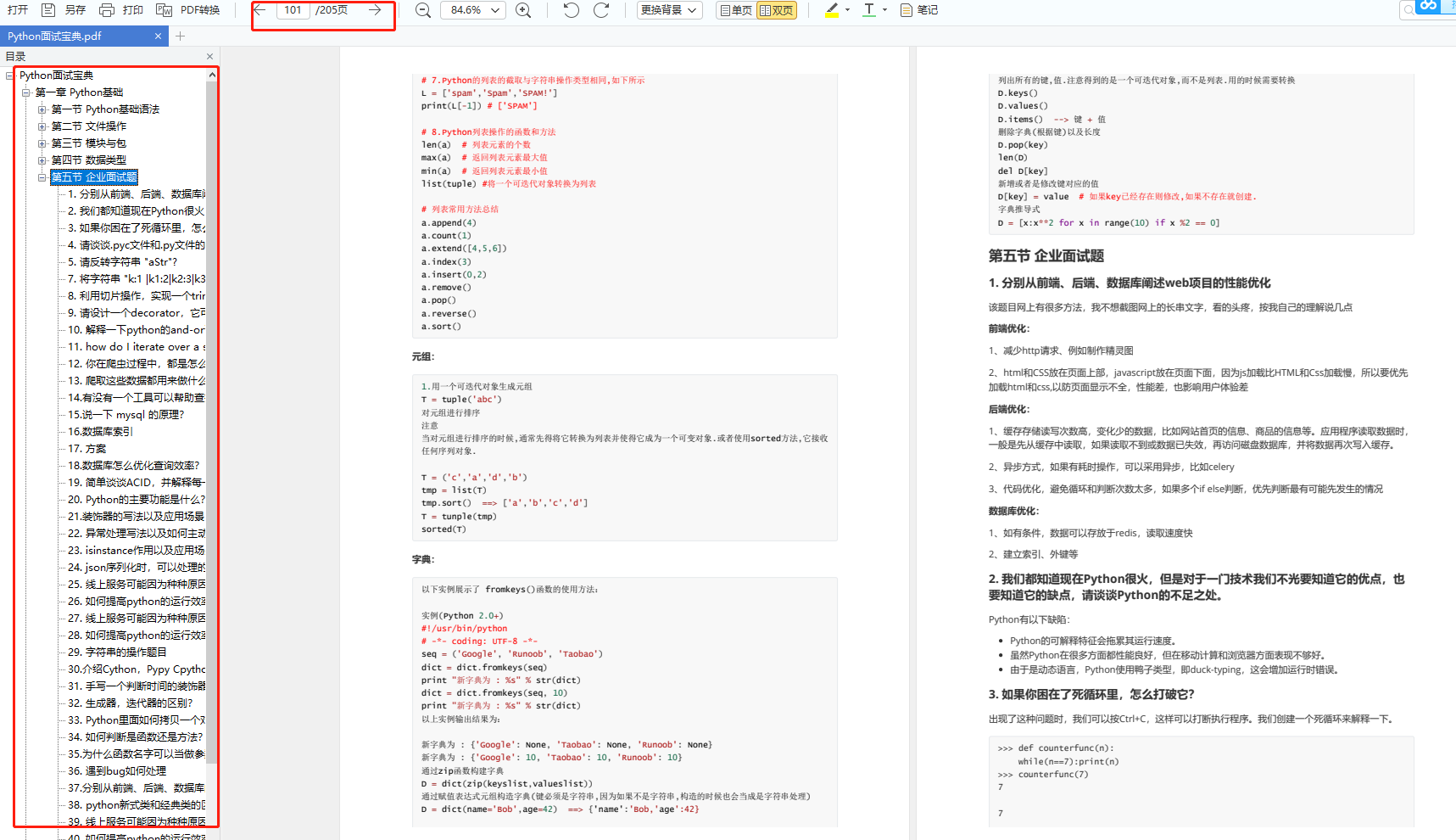

七、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

这份完整版的Python全套学习资料已经上传CSDN,朋友们如果需要可以微信扫描下方CSDN官方认证二维码免费领取【保证100%免费】

版权声明:本文为m0_59164304原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。