PyTorch Basics (1)

Installing PyTorch

The command below installs torch 1.6.0 using the Tsinghua mirror as the package index. For other versions, [click here]

pip install torch==1.6.0 torchvision==0.7.0 -f https://download.pytorch.org/whl/torch_stable.html -i https://pypi.tuna.tsinghua.edu.cn/simple

Checking the torch version and whether CUDA is available

import torch

import numpy as np

print(torch.__version__)

print(torch.cuda.is_available())

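Using TensorBoard

TensorBoard is typically used to plot the loss curve during training. Do not name your own script tensorboard.py: it would shadow the tensorboard package and the import would fail.

- Adding scalar data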

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

for i in range(100):
    # arguments: tag, scalar value (y), global step (x)
    writer.add_scalar("y=2x", 2*i, i)

writer.close()

- Adding an image

from torch.utils.tensorboard import SummaryWriter

from PIL import Image

import numpy as np

writer = SummaryWriter("logs")

img_path = r"image\000007.jpg"

img_PIL = Image.open(img_path)

img_numpy = np.array(img_PIL)

# Add the image; dataformats="HWC" because the NumPy array is laid out as height x width x channel
writer.add_image("test",img_numpy,0,dataformats="HWC")

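- Viewing the plots

1. With the latest PyTorch version, you can click the prompt to launch a TensorBoard session directly.

2. Run the command below in a terminal, from the directory that contains the logs folder. It is usually worth changing the port; otherwise, when several people train on a shared server, they all collide on the same port.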

(pytorch14) E:\workdir\pytorch_learning>tensorboard --logdir=logs --port=6007

3. In Google Colab, the approach above fails because localhost refuses the connection. Use the following instead:

%load_ext tensorboard

%tensorboard --logdir=logs

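- Clearing logs

If old log files are left in the log directory, they are plotted together with the new data and the resulting chart is misleading. Remember to delete logs you no longer need.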
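One way to keep old events out of the plots is to clear the log directory before a run, or to give each run its own subdirectory. The following is a minimal sketch using only the standard library; the helper name `fresh_log_dir` and the `logs/run-<timestamp>` layout are assumptions made for illustration and do not come from the original post.

```python
import shutil
import time
from pathlib import Path


def fresh_log_dir(root="logs", clear=False):
    """Return a per-run directory under `root` (hypothetical helper)."""
    root_path = Path(root)
    if clear and root_path.exists():
        # Delete every old event file so it cannot show up in the plots.
        shutil.rmtree(root_path)
    # One subdirectory per run, named by start time (second resolution).
    run_dir = root_path / time.strftime("run-%Y%m%d-%H%M%S")
    run_dir.mkdir(parents=True, exist_ok=True)
    return run_dir
```

With such a helper, `SummaryWriter(str(fresh_log_dir()))` would write each run into its own folder, and TensorBoard pointed at `logs` would show the runs as separate curves.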

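Format conversion with transforms

Training images or data may need a specific format or some preprocessing; the transforms module provides these operations.

- ToTensor()

Converts a numpy.ndarray or a PIL image to a tensor.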

from torchvision import transforms

tensor_trans = transforms.ToTensor()

img_tensor = tensor_trans(img_PIL)
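For an 8-bit image, ToTensor() does two things: it reorders the axes from height x width x channel (HWC) to channel x height x width (CHW), and it scales values from 0..255 to 0.0..1.0. The sketch below mimics that arithmetic on plain nested lists so the effect is easy to inspect. The function name `to_chw_float` is hypothetical, and this is not how torchvision implements the transform.

```python
def to_chw_float(img_hwc):
    """Reorder an HWC nested list to CHW and scale 0..255 to 0.0..1.0."""
    height = len(img_hwc)
    width = len(img_hwc[0])
    channels = len(img_hwc[0][0])
    return [
        [[img_hwc[y][x][c] / 255.0 for x in range(width)] for y in range(height)]
        for c in range(channels)
    ]


# A 1x2 RGB image: one red pixel and one white pixel.
tiny = [[[255, 0, 0], [255, 255, 255]]]
chw = to_chw_float(tiny)
print(len(chw), len(chw[0]), len(chw[0][0]))  # 3 1 2  (C, H, W)
print(chw[0])  # red channel:   [[1.0, 1.0]]
print(chw[1])  # green channel: [[0.0, 1.0]]
```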

- Normalize() (normalization)

trans_normalize = transforms.Normalize([0.5,0.5,0.5],[0.5 ,0.5, 0.5])

img_norm = trans_normalize(img_tensor)

writer.add_image("normalize",img_norm,2)
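Normalize(mean, std) computes `(value - mean) / std` per channel. With a mean of 0.5 and a std of 0.5, as in the call above, values in [0, 1] are mapped to [-1, 1]. A small sketch of that formula on single numbers (the function name `normalize` is only for illustration):

```python
def normalize(value, mean, std):
    """Per-element formula applied by Normalize to each channel."""
    return (value - mean) / std


for v in (0.0, 0.5, 1.0):
    print(v, "->", normalize(v, 0.5, 0.5))
# 0.0 -> -1.0
# 0.5 -> 0.0
# 1.0 -> 1.0
```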

- Resize()

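Takes a sequence or an integer. A sequence such as (512, 512) gives the output size as (height, width). An integer such as 128 resizes the shorter edge to 128 pixels while keeping the aspect ratio.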

trans_resize = transforms.Resize((512,512))

trans_resize_2 = transforms.Resize(128)  # an int: the shorter edge becomes 128 pixels
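To make the integer case concrete, the sketch below computes the output size when only the shorter edge is specified. It assumes the longer edge is scaled by the same factor and truncated to an integer; exact rounding may differ between torchvision versions. The function name and the 375 x 500 example size are hypothetical.

```python
def resize_shorter_edge(height, width, size):
    """Return (new_height, new_width) with the shorter edge equal to `size`."""
    if width <= height:
        return int(size * height / width), size
    return size, int(size * width / height)


print(resize_shorter_edge(375, 500, 128))  # (128, 170)
print(resize_shorter_edge(500, 375, 128))  # (170, 128)
print(resize_shorter_edge(300, 300, 128))  # (128, 128)
```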

- Compose()

Chains several transforms together; they are applied in the order given.

# tensor_trans is the ToTensor() instance created in the ToTensor() example
trans_compose = transforms.Compose([tensor_trans,
                                    trans_resize_2])
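Conceptually, Compose simply calls each transform in turn and feeds the result to the next one, so the order of the list matters. The class below is a simplified stand-in written for illustration (the name `ComposeSketch` is hypothetical); it uses plain functions on numbers instead of image transforms.

```python
class ComposeSketch:
    """Apply a list of callables one after another."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, x):
        for transform in self.transforms:
            x = transform(x)
        return x


pipeline = ComposeSketch([lambda v: v + 1, lambda v: v * 10])
reversed_pipeline = ComposeSketch([lambda v: v * 10, lambda v: v + 1])
print(pipeline(2))           # 30, i.e. (2 + 1) * 10
print(reversed_pipeline(2))  # 21, i.e. 2 * 10 + 1
```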

- RandomCrop()

Random crop. A two-element sequence gives a rectangular crop; a single integer gives a square crop.

trans_crop = transforms.RandomCrop(64)

for i in range(10):
    img_crop = trans_crop(img_tensor)
    writer.add_image("crop",img_crop,i)

writer.close()

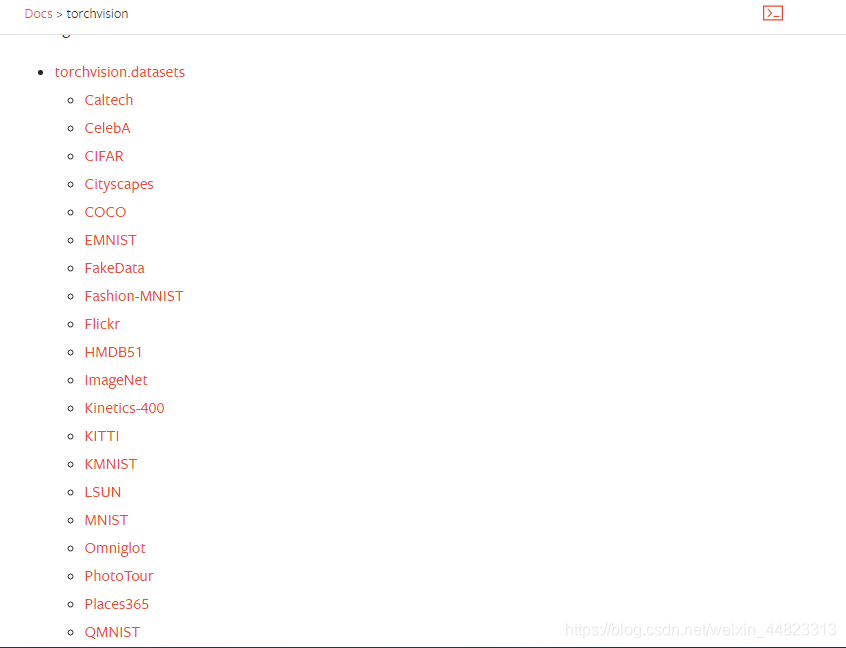
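The ten logged crops differ because each call picks a new random top-left corner such that the crop window still fits inside the image. A rough sketch of that selection, assuming the image is at least as large as the crop (the function name and the 375 x 500 image size are hypothetical):

```python
import random


def random_crop_box(height, width, crop_h, crop_w, rng=random):
    """Return (top, left, bottom, right) of a random crop window."""
    top = rng.randint(0, height - crop_h)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop_w)
    return top, left, top + crop_h, left + crop_w


# Ten 64x64 windows inside a 375x500 image, one per logged step.
for step in range(10):
    print(step, random_crop_box(375, 500, 64, 64))
```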

Datasets

- Custom datasets

This part is written up in a Jupyter notebook on my laptop.

- Datasets in torchvision [>>click here]

This part mainly shows how to download a dataset and use transforms together with it.

import torch

from torchvision import transforms

import torchvision

import os

from PIL import Image

from torch.utils.tensorboard import SummaryWriter

dataset_trans = transforms.Compose([transforms.ToTensor()])

root_path = "/content/mydataset"

train_set = torchvision.datasets.CIFAR10(os.path.join(root_path,"train"),train=True,transform=dataset_trans,download=True)

test_set = torchvision.datasets.CIFAR10(os.path.join(root_path,"test"),train=False,transform=dataset_trans,download=True)

writer = SummaryWriter("logs")

for i in range(10):
    img,target = train_set[i]
    title = train_set.classes[target]  # human-readable class name of this sample
    writer.add_image("pic",img,i)

writer.close()

Viewing the result in TensorBoard

%load_ext tensorboard

%tensorboard --logdir=logs

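Loading data with DataLoader

A DataLoader is an iterable. Each batch it yields is a pair: the first element is the image tensor and the second is the class labels (targets).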

from torch.utils.data import DataLoader

test_loader = DataLoader(dataset=test_set,batch_size=64,shuffle=True,num_workers=0,drop_last=False)

epochs = 3

for epoch in range(epochs):
    step = 0
    for data in test_loader:
        imgs,targets = data
        writer.add_images("Epoch {}".format(epoch),imgs,step)
        step += 1

writer.close()

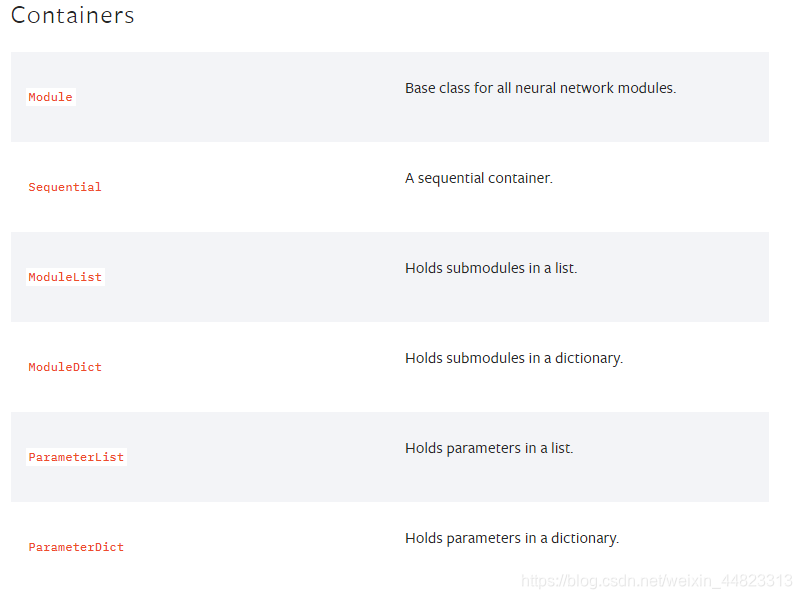
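The number of steps per epoch follows from the dataset size, `batch_size`, and `drop_last`. The CIFAR10 test split has 10000 images, so with a batch size of 64 the arithmetic works out as sketched below (the helper name `num_batches` is only for illustration):

```python
import math


def num_batches(num_samples, batch_size, drop_last):
    """Batches per epoch for a given dataset size and batch size."""
    if drop_last:
        return num_samples // batch_size        # incomplete last batch is discarded
    return math.ceil(num_samples / batch_size)  # incomplete last batch is kept


n = 10000  # size of the CIFAR10 test split
print(num_batches(n, 64, drop_last=False))  # 157
print(num_batches(n, 64, drop_last=True))   # 156
print(n % 64)                               # 16 images in the final, smaller batch
```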

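Building a neural network

The key module for building networks is torch.nn. Its Containers section includes several very commonly used classes, such as Module. Every custom module we define subclasses it, as in the code below.

The convolution and pooling layers are defined in the initializer, and forward() defines the path the signal takes through them.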

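import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Conv2d(3, 20, 5)   # 3 input channels, 20 output channels, 5x5 kernel
        self.conv2 = nn.Conv2d(20, 3, 5)   # back to 3 channels so the output can be viewed as an image

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))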

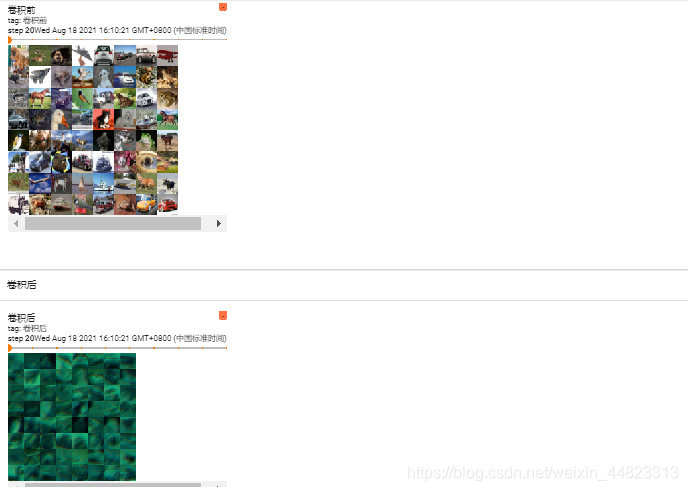

root_path = "/content/workdir"

test_set = torchvision.datasets.CIFAR10(root=root_path,train=False,transform=transforms.ToTensor(),download=True)

dataloader = DataLoader(dataset=test_set,batch_size=64,shuffle=True)

net = Model()

step = 0

for data in dataloader:
    imgs,targets = data
    writer.add_images("before_conv",imgs,step)
    y = net(imgs)
    writer.add_images("after_conv",y,step)
    step += 1

writer.close()

TensorBoard then shows how the images change before and after the convolutions.
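Besides the pixel values, the image size also changes: a 5x5 convolution without padding removes 4 pixels from each side length. The sketch below applies the standard output-size formula, assuming stride 1, no padding, and no dilation, which are the Conv2d defaults (the function name `conv2d_out` is hypothetical):

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution (dilation of 1 assumed)."""
    return (size + 2 * padding - kernel) // stride + 1


after_conv1 = conv2d_out(32, 5)           # CIFAR10 images are 32x32
after_conv2 = conv2d_out(after_conv1, 5)
print(after_conv1, after_conv2)  # 28 24
```

So each batch goes in as 64 x 3 x 32 x 32 and comes out as 64 x 3 x 24 x 24. Because the second convolution maps back to three channels, the output can still be displayed as RGB images.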

Some commonly used modules

torch.flatten(): flattens a feature map

nn.MaxPool2d(): max pooling

nn.Conv2d(): 2D convolution

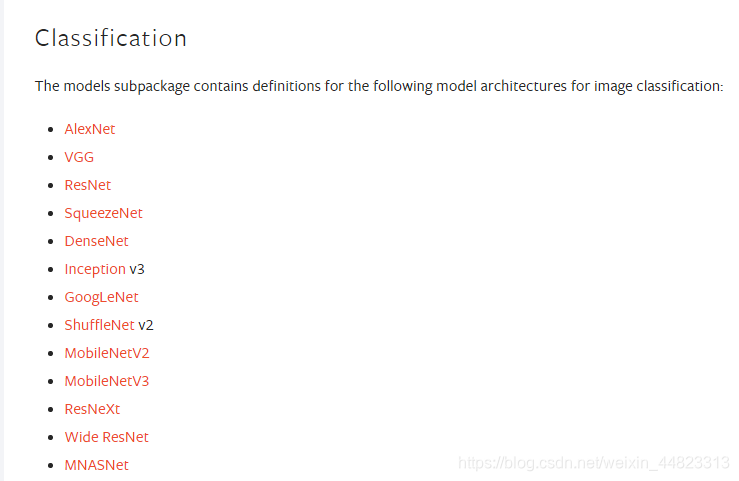

Commonly used models

torchvision is for images, torchtext is for text, and torchaudio is for audio.

Training a neural network

Imports

import torch.nn as nn

import torch.nn.functional as F

import torch

import torchvision

from torchvision.transforms import transforms

from PIL import Image

from torch.utils.tensorboard import SummaryWriter

from torch.utils.data import DataLoader

writer = SummaryWriter("logs")

Defining the network models

class Model1(nn.Module):
    def __init__(self):
        super(Model1, self).__init__()
        self.conv1 = nn.Conv2d(3, 20, 5)
        self.conv2 = nn.Conv2d(20, 3, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))

class Model2(nn.Module):
    def __init__(self):
        super(Model2, self).__init__()
        self.conv1 = nn.Conv2d(3,32,5,padding=2)
        self.maxpool1 = nn.MaxPool2d(2)
        self.conv2 = nn.Conv2d(32,32,5,padding=2)
        self.maxpool2 = nn.MaxPool2d(2)
        self.conv3 = nn.Conv2d(32,64,5,padding=2)
        self.maxpool3 = nn.MaxPool2d(2)
        self.flatten = nn.Flatten()
        self.linear1 = nn.Linear(1024,64)
        self.linear2 = nn.Linear(64,10)

    def forward(self,x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x

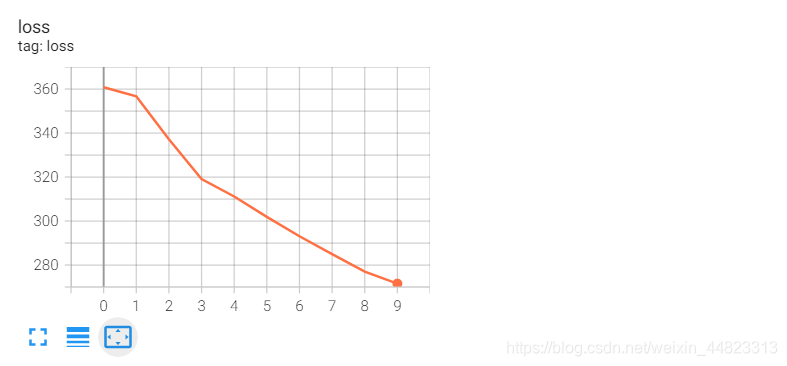
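The 1024 input features of the first linear layer in Model2 follow from the layer sizes. Each 5x5 convolution with padding=2 keeps the side length, each MaxPool2d(2) halves it, and the last convolution has 64 output channels. The sketch below traces this for a 32x32 CIFAR10 input; the helper names are hypothetical and stride and dilation are assumed to be the library defaults.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Output side length of a convolution (dilation of 1 assumed)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output side length of MaxPool2d(kernel): stride defaults to the kernel size."""
    return (size - kernel) // kernel + 1


size = 32
for _ in range(3):                       # three conv + pool stages
    size = conv_out(size, 5, padding=2)  # 5x5 conv with padding 2 keeps the size
    size = pool_out(size, 2)             # 2x2 max pooling halves it
flat_features = 64 * size * size         # 64 channels after conv3
print(size, flat_features)  # 4 1024
```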

Training

root_path = "/content/workdir"

# train_set = torchvision.datasets.CIFAR10(root=root_path,train=True,transform=transforms.ToTensor(),download=True)

test_set = torchvision.datasets.CIFAR10(root=root_path,train=False,transform=transforms.ToTensor(),download=True)

dataloader = DataLoader(dataset=test_set,batch_size=64,shuffle=True)

net = Model2()

loss_crossentropy = nn.CrossEntropyLoss()

optim = torch.optim.SGD(net.parameters(),0.01)

epochs = 10

for epoch in range(epochs):
    running_loss = 0.0
    for data in dataloader:
        inputs,targets = data
        outputs = net(inputs)
        loss = loss_crossentropy(outputs,targets)  # compute the loss
        optim.zero_grad()  # clear old gradients before backpropagation
        loss.backward()  # backpropagate the loss to compute gradients
        optim.step()  # update the weights and biases
        running_loss += loss.item()  # .item() yields a plain float, so no autograd graph is kept
    writer.add_scalar("loss",running_loss,epoch)
    print(f"Epoch: {epoch} loss: {running_loss:.4f}")

writer.close()

Output:

Files already downloaded and verified
Epoch: 0 loss: 360.7612
Epoch: 1 loss: 356.6532
Epoch: 2 loss: 337.1882
Epoch: 3 loss: 319.0812
Epoch: 4 loss: 311.1434
Epoch: 5 loss: 301.8529
Epoch: 6 loss: 293.0782
Epoch: 7 loss: 284.9710
Epoch: 8 loss: 276.9984
Epoch: 9 loss: 271.5492
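A quick sanity check on these numbers: the logged value is the sum of the per-batch losses over one epoch, and nn.CrossEntropyLoss averages over each batch by default. A network that guesses uniformly among 10 classes has a cross-entropy of ln(10) per sample, so the first epoch should start near 157 batches times ln(10). The sketch below does this arithmetic with the standard library only; the variable names are for illustration.

```python
import math

num_classes = 10
batches = math.ceil(10000 / 64)       # 10000 test images, batch size 64
chance_loss = math.log(num_classes)   # cross-entropy of a uniform guess

print(batches)                           # 157
print(round(batches * chance_loss, 1))   # 361.5, close to the 360.7612 logged for epoch 0
print(round(271.5492 / batches, 2))      # 1.73, mean per-batch loss in the last epoch
```

Dividing the summed loss by the number of batches gives a value that is easier to compare across batch sizes and datasets.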

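Copyright notice: This is an original article by weixin_44823313, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.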