kafka总结

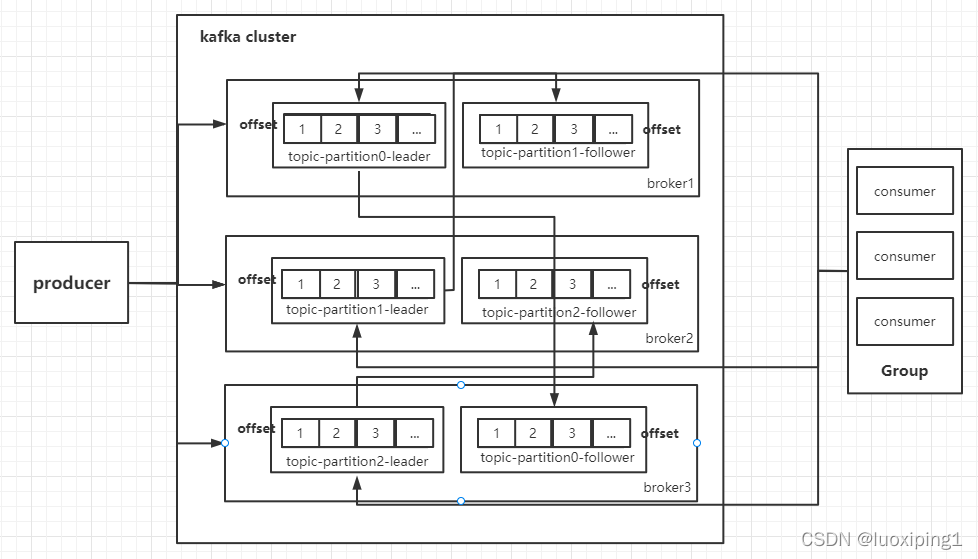

kafka架构图

kafka message有3部分组成

1.offset 表示message在partition中的偏移量 是一个逻辑上的值 唯一确定了Partition中的一条message

可以简单的认为是一个id

2.MessageSize 表示message内容data的大小

3.data message的具体内容

message通过topic进行分类

在整个kafka架构中,生产者和消费者采用发布和订阅的模式 生产者生产消息 消费者消费消息

他两各司其职 并且都是面向topic

topic是一个逻辑上的概念 partition是一个物理上的概念 每个Partition对应一个log文件

该log文件中存储的就是Producer生产的数据

Producer生产的数据会不断的追加到log文件末端 且每条数据都有自己的offset

消费者组中的每一个消费者 都会实时记录自己消费到了哪个offset 这样当出现故障并恢复后

可以从这个offset位置继续进行消费 避免漏掉数据或者重复消费

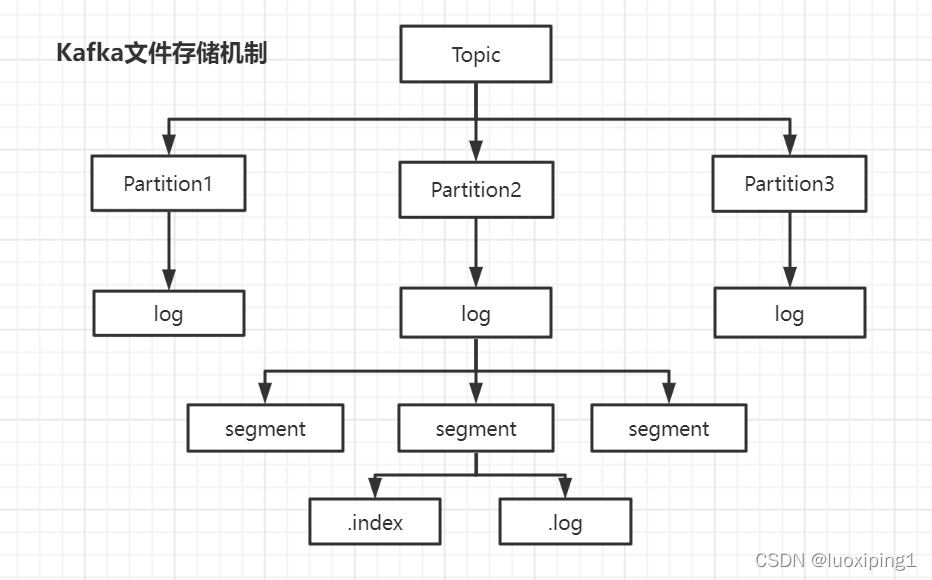

文件存储机制

文件存储结构及命名规则

在kafka设计之初 考虑到生产者生产的消息不断追加到log文件末尾后导致log文件过大的情况

所以采用了分片和索引机制 具体来说就是将每个partition分为多个segment 每个segment对应

三个文件 .index .log .timeindex(早起版本中没有) 其中.index .log文件位于一个文件夹下

该文件夹的命名规则为 topic名称+分区序号

例如gzlTopic这个topic有两个分区 则对应的文件夹为 gzlTopic-0,gzlTopic-1

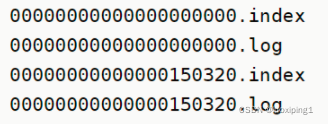

文件夹下的文件如截图

通过这个文件夹下有两个log,我们可以得出结论 这个partition有2个segment

kafka存储机制图

文件关系

index文件和log文件的关系

index文件存储大量的索引信息

log文件存储大量的数据

索引文件中的元数据指向对应数据文件中的message的物理偏移地址

使用offset查找message

每一个segment的文件名是上一个segment最后一条消息的offset 所以当需要查找一个指定offset

的message 通过在所有segment文件名中进行二分查找就能找到他归属的segment

再在其index文件中找到其对应到log文件上的物理位置,就能拿到message

kafka client核心API

AdminClient API

允许管理和检测Topic、broker以及其它kafka对象

Producer API

发布消息到1个或多个topic

Consumer API

订阅一个或多个topic,并处理产生的消息

Streams API

高效地将输入流转换到输出流

Connector API

从一些源系统或应用程序中拉取数据到kafka

实操

Topic创建和管理

1)创建Topic

/*

创建Topic实例

*/

public static void createTopic() {

AdminClient adminClient = adminClient();

// 副本因子

Short rs = 1;

NewTopic newTopic = new NewTopic(TOPIC_NAME, 1 , rs);

CreateTopicsResult topics = adminClient.createTopics(Arrays.asList(newTopic));

System.out.println("CreateTopicsResult : "+ topics);

}

/*

设置AdminClient

*/

public static AdminClient adminClient(){

Properties properties = new Properties();

properties.setProperty(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

AdminClient adminClient = AdminClient.create(properties);

return adminClient;

}

Producer生产者实操

/*

Producer异步发送带回调函数和Partition负载均衡

*/

public static void producerSendWithCallbackAndPartition(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG,"com.imooc.jiangzh.kafka.producer.SamplePartition");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record, new Callback() {

@Override

public void onCompletion(RecordMetadata recordMetadata, Exception e) {

System.out.println(

"partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

});

}

// 所有的通道打开都需要关闭

producer.close();

}

/*

Producer异步发送带回调函数

*/

public static void producerSendWithCallback(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record, new Callback() {

@Override

public void onCompletion(RecordMetadata recordMetadata, Exception e) {

System.out.println(

"partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

});

}

// 所有的通道打开都需要关闭

producer.close();

}

/*

Producer异步

*/

public static void producerSend(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record);

}

// 所有的通道打开都需要关闭

producer.close();

}

/*

Producer异步阻塞发送

*/

public static void producerSyncSend() throws ExecutionException, InterruptedException {

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

String key = "key-"+i;

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,key,"value-"+i);

Future<RecordMetadata> send = producer.send(record);

RecordMetadata recordMetadata = send.get();

System.out.println(key + "partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

// 所有的通道打开都需要关闭

producer.close();

}

Consumer消费者实操

/*

工作里这种用法,有,但是不推荐

*/

private static void helloworld(){

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "true");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String,String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

for (ConsumerRecord<String, String> record : records)

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(),record.offset(), record.key(), record.value());

}

}

/*

手动提交offset

*/

private static void commitedOffset() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

for (ConsumerRecord<String, String> record : records) {

// 想把数据保存到数据库,成功就成功,不成功...

// TODO record 2 db

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

// 如果失败,则回滚, 不要提交offset

}

// 如果成功,手动通知offset提交

consumer.commitAsync();

}

}

/*

手动提交offset,并且手动控制partition

*/

private static void commitedOffsetWithPartition() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}

/*

手动提交offset,并且手动控制partition,更高级

*/

private static void commitedOffsetWithPartition2() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// jiangzh-topic - 0,1两个partition

TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0);

TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1);

// 消费订阅哪一个Topic或者几个Topic

// consumer.subscribe(Arrays.asList(TOPIC_NAME));

// 消费订阅某个Topic的某个分区

consumer.assign(Arrays.asList(p0));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}版权声明:本文为luoxiping1原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。